Introduction

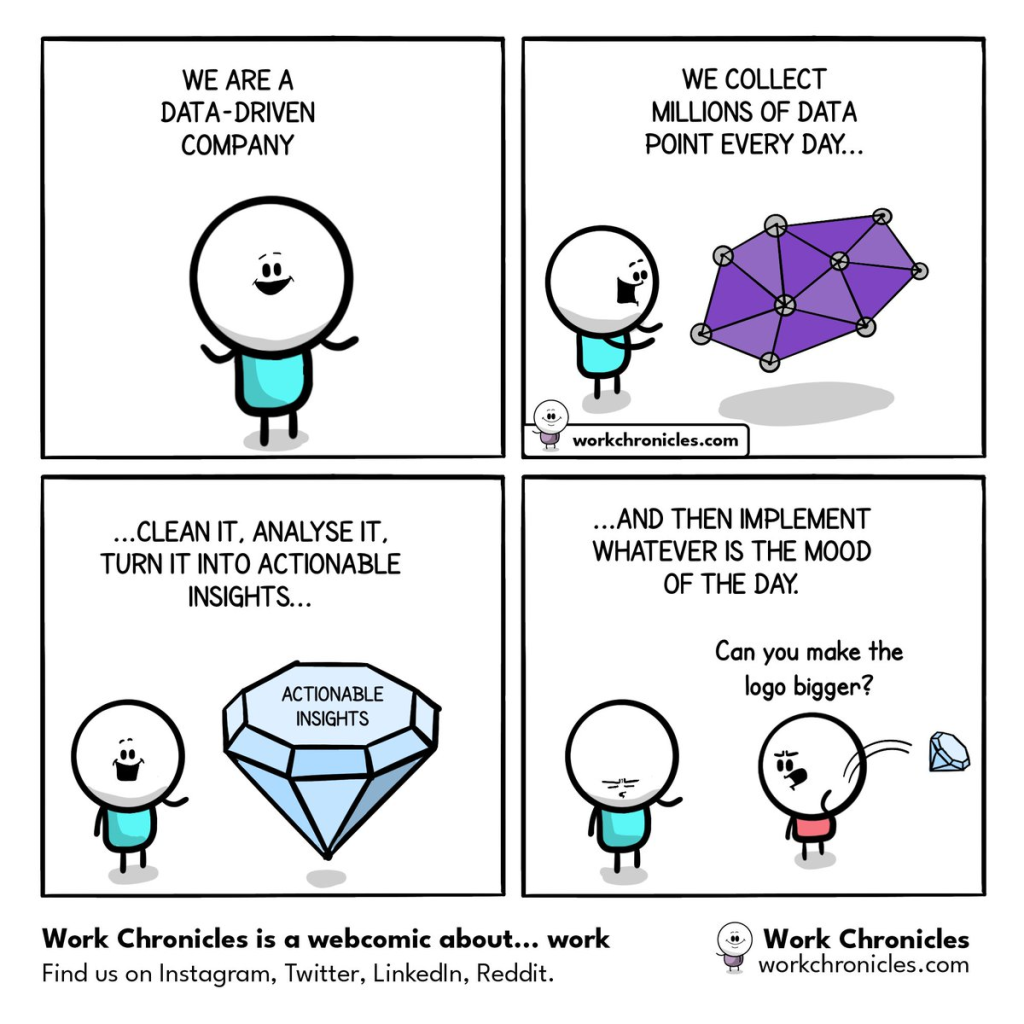

In recent times, the likes of the CTO have stated that we need to use modernised technology and tools. One aspect that they love is software that produces statistics/metrics that we can then judge improvements over time.

When we buy software licences for such a tool, there is always hype among certain groups of people who will volunteer to take ownership and work on implementing such software (installation, training, creating a “best practices” process), and will take any opportunity to highlight it to the managers.

So the “soup of the day” is a tool called Datadog which seems like a very powerful tool and has all kinds of integrations. I found this “jack-of-all-trades” approach was difficult to really understand what Datadog was for, and why it was different from what we had before. I knew we had Dashboards that showed which servers were running, their processor/memory usage, and which versions of our software was installed, and more. Datadog is used for this purpose too.

Jargon Sales Pitch

One reason why it is difficult to understand is that Datadog’s webpage spouts loads of jargon, but also internally, managers love spouting jargon too. Here is what one DevOps member said about Datadog (warning – these next paragraphs contain a lot of jargon):

“As our organisation continues to grow and evolve, it is essential that we have a comprehensive and centralised observability solution in place. Currently, we are using multiple disparate siloed monitoring tools, which not only is inefficient but also hinders our ability to identify and resolve issues promptly. This leads to decreased visibility and a lack of agility in our operations.

Datadog observability provides a unified platform that consolidates all our monitoring, logging and tracing tools into one solution. This not only reduces the complexity of our monitoring landscape but also gives us a single source of truth for all our operational data. By implementing Datadog observability, we will have the ability to quickly and easily identify and resolve issues across our entire infrastructure, reducing downtime and improving overall service levels.

Moreover, Datadog observability offers the ability to deploy configuration changes to the Datadog agent with agility, which is critical in a fast-paced and dynamic environment where changes to our infrastructure occur regularly. With Datadog observability, we will be able to quickly and easily make updates to our monitoring configuration, ensuring that our monitoring remains up-to-date and relevant at all times.

With a pre-approved change, it will be easier for us to leverage the 600+ integrations that we can configure to further enhance our current infrastructure observability, root cause analysis and incident mitigation. This will allow us to gain greater insights into our operations, improving our ability to identify and resolve issues before they become critical.

In conclusion, authorisation and creation of a Datadog pre-approved change will bring numerous benefits to our organisation, including increased visibility, improved agility, and reduced complexity. This solution will help us effectively monitor and manage our infrastructure, ensuring that our operations run smoothly and efficiently.”

DevOps Engineer

That really sounded like he was saying the same thing multiple times and was really emphasising the speed. I think a concise statement is that “Datadog is one software product for monitoring, and can replace many metric tools that we currently have”. So I would imagine it should be cheaper (paying one licence rather than several), and since it is all in one place – probably easier to create new dashboards.

Jargon From The Docs

On their page, Collect SQL Server Custom Metrics, they show how you can run a custom query involving a person’s age. Isn’t that a terrible example? This would run every minute (or whatever it is configured to do) and you will create graphs from this. Without good examples, it’s hard to understand how or why you would use this feature. Other problems are due to excessive jargon.

“In v6, DogStatsD is a Golang implementation of Etsy’s StatsD metric aggregation daemon. It is used to receive and roll up arbitrary metrics over UDP or Unix socket, thus allowing custom code to be instrumented without adding latency.”

Datadog

“Enabling JMX Checks forces the Agent to use more memory depending on the number of beans exposed by the monitored JVMs.”

Datadog

Official Training

Members of the Network team, DevOps, a few managers, and some volunteers (who want managers to look upon them favourably) – signed up to a session with official Datadog training staff. These sessions were recorded, and I watched these and made a few notes; although it was just riddled with jargon and hard to know what anyone was talking about.

“Datadog Expert Services, or DES for short, is a set of “guided hands-on keyboard” pair-programming sessions. These collections of sessions, collectively known as an engagement, are time boxed and specifically designed to enable you to get the most out of Datadog while adhering to best practices. In this session, our team will work you to configure and deploy the Datadog Agent. This includes deployment in a standard, or Kubernetes containerized environment.”

Datadog

There were 2 courses that it seemed that these people were enrolled on

| Name | Time | Cost |

| QS-INF course | 2 Weeks + 5 Sessions, Curated | $15k |

| QS-INF-LOG course | 3 Weeks + 8 Sessions, Curated ** | $25K |

Training cost is bonkers isn’t it? Once you have paid all that, then it pushes you toward the sunk-cost fallacy.

One of the Instructors asked what our infrastructure was.

“we’ve got resources and infrastructure in Azure, with a bias towards AWS, then we have on-prem; most of it is Windows Server. A combination of 2012…and onwards. 2016, but mainly 2019 as well. They also run on Windows HyperVisor, and also VMware – so they are virtual machines. But actually, we also have physical servers as well.”

deployment dude

Basically, we just made it up as we went along and got all the things! It sounds like a similar thing was done with the monitoring, because the deployment dude said we have “16 or 17 on-prem monitoring tools, as well as custom Powershell scripts to generate some data to monitor”

The Datadog Instructor explains that we have to log tickets if it is outside our “engagement time”. They will reply when they can but there’s no set time-frame.

“That’s fine with us, we log enough tickets already, so that’s fine. I think we will welcome that.”

DevOps Engineer

It’s almost like we were taking any opportunity to slag our company off.

No Going Back

Good news everyone!

The DevOps engineers with support from the Architecture Team have levelled up our Live datacentres!

How? With estate wide deployment (completed Friday evening) of the incredible, uber-awesome full stack monitoring SaaS Datadog!

If you’re aware of Datadog’s capabilities, effortless integration and out-of-the-box features you’ll appreciate how monumental this is.

For the uninitiated, Datadog in a slick, AI driven, intuitive UX allows full stack monitoring of servers, databases, tools, services, containers, et al.

Effortlessly switch from viewing the entirety of all network traffic to drilling down into individual requests, logs, payloads, processes, you name it, in real-time.

Going forward we envisage significant improvements to our reaction and mitigation of all types of incidents, minor to major!

We are currently trialling access – To request access please join our Slack channel.

Stay tuned as we have more exciting stuff coming as a result of our DevOps strategy!

Watch this space!

DevOps Engineer

Web-based Demo

One team put together a small web-based app and presented a demo to the department to promote Datadog, and obviously, take the opportunity to look amazing in front of the management.

The team lead was trying to show a feature called “Cumulative Layout Shift” but didn’t explain it. He made out it could track how many parts of the website load – so you know how sometimes you load a webpage and might see some text, then an image suddenly pops on screen, then some adverts, and often it causes the layout to change, then some more adverts appear, possibly changing the layout once more? It’s not a smooth user experience and causes a lot of jerks if the user tries to navigate the page before it has fully loaded. So how does Datadog track that? What is tracking it? and wouldn’t that mean there are multiple server calls to Datadog to log it? The web page is already slow, so why would adding extra server calls back out make it better? I can’t see how that can be performant, especially when you have thousands of users. Isn’t this process logging an insane amount of trivial data over time? I think I was left with way more questions than answers.

He also said it can track time spent on a particular web page, view count, error count, action count, frustration count (he claims Datadog tracks clicks out of frustration. How?). When people are already worried about the amount of monitoring/tracking/surveillance with the likes of tracking cookies – and then websites can track you to this granular scale with Datadog; it is a bit worrying isn’t it!?

Everyone should use Datadog

In following department meetings we were told by the CTO that all teams would eventually use Datadog and we need to increase the amount of monitoring, and to do it quickly to take advantage of the benefits of the tool.

My manager wanted our team to create a Datadog dashboard. Even if it wasn’t that useful, she wanted to be among the initial users – probably to look good to her manager.

I asked one of the smartest developers if it was even suitable for my team. He was looking into creating a dashboard for his team, but his team had an API that third-parties could use and it was prime for this kind of monitoring.

He was a bit vague though:

“You could create a custom metric for it. But I wouldn’t be too sure. I’m probably going to use custom metrics for “#messages per APP per minute” sort of thing. But I can get all that from my Logs/Traces. You’d have to have something pulling that data from the main databases which would involved pushing it to Datadog.”

Principal Developer

I asked other people that were using it, and people just kept on saying they weren’t sure, or maybe others have done it.

“We’re making heavy use of Datadog for our new software and I believe it’s also being used in other areas too. It’s incredibly powerful and provides a huge amount of detail. Getting the most out of it is important and also building some standards seems like a good idea. Do we have any thoughts around how we ensure we lead the way with this and get some standard/learning/documentation in place?”

Developer

No one can give a straight answer when it comes to this stuff. People are like “EVERYONE is using Datadog“, then when you ask about it in more detail, they are like “well SOME teams are using Datadog“, then when you ask more people, they are like “there are some metrics but not quite the ones you want“

Performance Problems

I asked my Software Architect friend (who seems to know everything) if Datadog is as flawless as people were implying. My intuition was thinking it cannot have zero disadvantages.

Me Won't Datadog just cause performance issues if we start monitoring everything? Mark yep, or run while patching is in progress and block access to the Database/tables, which has already happened. Running ad-hoc scripts is a fairly bad idea Hosted had to run patching twice the other week, which pushed us out of our Service Level Agreement. Me: this juicy gossip keeps quiet doesn't it Mark yes because Datadog is a massive success and we paid lots of money for it

Technical Director

Recently we hired a “Technical Director”. He asked how Datadog was coming along and if we can highlight any issues so he can get involved. This prompted John to go on a rant. The TLDR of this section is that “Software Developers don’t know about infrastructure of the Live Production environment.”

I think one of the company’s biggest challenges is how many products we have, and how diverse they are. We have no real standardisation due to a number of different factors, not sun-setting old services, not tackling tech debt, products that were developed by other companies and came to us via acquisition etc..

As a result, I think it’s difficult for us to template things out such that it can work for multiple people.

Realistically, each team for each product needs to look at how their product works, how it’s used, what tech it’s built on, and build a solution that works for their product. And I think one of the biggest challenges at the company is the ‘DevOps wall of confusion’ isn’t just a normal wall, it’s a Trumpian 15 foot high one with razor wire. Lots of products have dev teams (assuming they have one at all!) with little to no exposure or knowledge of how production works and what it looks like. For so long dev teams were told they had no role in production, no need to access it and were kept locked away from it

For reference, I used to think like that. I’ve been here 15 years and I have been part of the mindset in the past. It’s changing, and I’m happy to be one of the people pushing for that change, breaking down that wall of confusion. But that’s one of your biggest hurdles – is that people don’t know what to monitor in production because they don’t know what it looks like, and trying to monitor it by just copying a template that worked for somebody else, but doesn’t work for their solution isn’t a way to solve it

The key to unlocking Datadog for me, is to get people to have visibility of production, to understand how it’s used and what it looks like, and then start to work out what metrics are important, and what “normal” looks like so we can alert when we deviate from that

I can talk for hours about this, my team has one of the best observabilities out there, and had it before Datadog came around. If you want to have a chat, happy to have a discussion about what we can do.

I may have painted a somewhat negative opinion above, and I agree that there are things that we can improve. But we can’t expect some pretty Datadog dashboard templates to solve the historical problems that have meant we have lots of live services in the business with nobody who understands where they are or how they work and crucially expect Operations 24/7 to be able to magically pick up the pieces and fix it by themselves when it falls apart.

Yes, the company has a long history of developing a solution, moving the team that developed it off onto a new project, and leaving that solution behind. Combine that with a massive wall of confusion between Dev and Hosted, you have hosted running a bunch of servers that they have no idea what they do.

Case in point right now, the “Login and Identity service” is in the main data-centre, and we also have one in the DMZ that was built for the Mobile app, but nobody is quite sure what the main one is for. I have some notes that indicate it was built for the Connect app, but Connect doesn’t use it. Yet still that production sits there unused with nobody sure why it’s there.

You’ll find a team that has maybe done work in the past on Appointments, maybe even recently. Are they currently working on Appointments? do they have any knowledge or visibility of production? is it even on their radar that they should be monitoring the performance of it?

This goes deeper than just dashboard templates, it’s a company culture problem

John

Anomaly detection works well if the metrics are predictable for different periods of a day. It’s not a “AI” as we thought when I tried it out, it’s more of a fancy algorithm than machine learning.

I found with XMPP that method would work OK for Mon-Fri, then the alert will trigger all weekend because traffic wasn’t as high those days.

Lee

Scheduler

I was added to a group chat where the team was discussing how to use Datadog on our “Scheduler”. It sounds like an easy task, but there’s way more nuance and technicality to it. The main problems we have with the scheduler is that:

- some jobs fail and then wait to be reset,

- some jobs run but get stuck (I think most cases the scheduler isn’t informed that the job has finished, so it fails to schedule the next run).

The TLDR of this section is that: there is a lot of junk data (and I mean A LOT) and reporting on these figures can be somewhat misleading because failed jobs for organisations that no longer exist aren’t a problem (although we should stop them from running since they are obsolete).

John Surely we need something that runs that shows us: a count of jobs in Error Status A list of jobs with a status of Running, Long Running Jobs Matthew We'll want to take into account the normal running time of a particular job. We don't want to be alerted about a job that usually takes 2 hours to run and it has only been 1 hour. We'll get to ones that get stuck quicker if they usually take a minute to run Dave Someone should run some queries against live now, and get a picture that looks correct. Matthew We also want the data to be meaningful otherwise we'll be back to where we are now - where we don't know what's actually working and what isn't. There's a balance to be had here Christian Can we summarise the Key Performance Indicators that will cause an action that needs to be performed? These become multiple metrics IMO, that add together to give better context John 1. Job queue building up 2. jobs failing and not being reset 3. jobs getting stuck Matthew • Large numbers of jobs not running when they should • Jobs stuck in running beyond their normal running time • Mass job failures • Mass job queues (this has the potential to false flag when workload is high) John There's a bug / unexpected behaviour where the scheduler can fail to update the database with the result of a job and the table shows it in status Running. Scheduler logic queries the tables for what is / isn't running. Leaving it to make decisions that it can't do stuff because a job is "running" when it in fact isn't. Matthew If this is a bug, the smartest thing to do after the monitoring piece is to fix the piece of software causing it surely? John the secret to any good bug report is reproduction steps, and it's not an easy one to reproduce You mentioned you'd had one get "stuck" recently. Do we know how to reproduce that behaviour again on demand? Matthew "Just let the scheduler do it's thing and wait" is the only way we know how to replicate these John hence why any developer would struggle to fix it because it's difficult to know where to look if you can't reproduce it Christian "Treasure what you measure" or "Measure what you treasure". Simple counts and alerts will likely get us to what we need very short term which is to prevent or proactively manage / reduce potential for a Major Incident. Matthew I've got some initial queries together for this that could be used to alert on if the numbers get too high. I'd appreciate someone who knows TSQL to have a look and to suggest any improvements to the data being returned. John the 3000 + jobs in error is scary Do we need to filter that list by only jobs that we know get automatically reset by the Hosted DBA agent jobs? Matthew Maybe, I did think that but I also thought that we should really know how many jobs are actually in error John I know that list in itself is a problem. But I think all Domains are going to have a high failed count and it's difficult to know if there are important ones in that 3000 + count Matthew We shouldn't alert on that metric, hence the one to track how many in error for the last hour The scheduler is a massive mess and that 3000+ count suggests we have a fair bit of clean-up to do. John the only suitable metric I can think of for "important' is ones that the Database Administrators already deemed as important and created automated resets for. Matthew I could add an additional "Important" row to the general stats that includes those (or excludes any that aren't those) Need that info from the Database Administrators though John Do we maybe need a couple of groups rather than just 1 "important" group Matthew I'd rather split the jobs and call out their names though, rather than pile them into one huge count if we're doing that Let's get the data in Datadog first and see what normal looks like and tune alerting accordingly JobTypeIDs aren't consistent across the estate by the way, so you'll have to match on JobTypeName with a join to the JobType table <Image of 2048 Ready jobs. 47 Error> John Interestingly those 47 jobs are haven't run in years. some of them last ran successfully in 2016 but we're resetting them every day to try and run and constantly failing (job run count of 271,280) Matthew Hence my comment about a lot of clean-up - I'm willing to bet these are trying to run for closed Orgs, or orgs that have moved endpoint John Each Domain will probably need work to get rid of all the false alarms I know when I checked one domain there were 40 + jobs that had never ran and were just constantly being reset Maybe an idea to simply disable these and change the script to add & Enabled = 1 to the filter so you count only enabled jobs? That should help remove the false positives you know about - then you can actually alert if the value goes above 0 for jobs in error Paul We are assessing whether the best approach to reduce the number of scheduler incidents is to deliver the new scheduler with improved logic and Datadog integration which will take time. Or support the integration of Datadog with the current scheduler. Matthew If it's the former, should we still do the latter anyway until the new scheduler logic is in place? I suppose what I'm trying to ask is will the time-frames for implementing the new logic be quick enough to satisfy the urgency of monitoring the scheduler? Paul Yes agreed, we have just reviewed the last 9 months of incidents and having Datadog reporting would have given us the insight to avoid a number of these. John As well as adding an "enabled=1" filter Matthew, do you think it's worth adding a runcount > 0 filter as well to avoid counting jobs that have never ran? For the sample Domain I looked at, every priority job in error had a run count of 0 showing they've never worked. Adding this would bring that result down to 0 which makes it much easier to then set an alert if that goes above 0 Matthew I thought about that, but that will mask errors with jobs that should run but haven't. We'll want to see those. New job types as well, for example John going to be a hell of a job tidying up all the crap scheduled jobs in the scheduler AND setting up and calibrating monitoring at the same time My thoughts were to filter those out for now, then look at those crap jobs later Matthew Yep, it is, but we can't ignore the mess as it won't go away. A lot of work disabling jobs will be needed to get the overall stats query to show nice figures. We shouldn't shy away from them looking terrible though. I don't believe in fiddling figures to make things look nice when it comes to monitoring The other queries that show failures and stuck/running jobs for over an hour will help with spotting immediate issues though One particular situation to take into account is the longest-running job we have is 8 hours. We can take care of that in Datadog with trends and anomaly detection to tell us when more jobs than the expected ones are stuck in a status for longer than an hour. Similarly, we can use that same alerting method to warn us when the numbers on the overall stats aren't within usual parameters. Change detection is also a good measurement to use here too. We don't necessarily have to use traditional methods of alerting as soon as a value is over X John that sounds to me like a case of another metric count of scheduled jobs running more than 1 hour where job type is NOT “expect long-running jobs”

Performance Problems Part 2

Note: If the min_collection_interval is set to 30, it does not mean that the metric is collected every 30 seconds, but rather that it could be collected as often as every 30 seconds. The collector tries to run the check every 30 seconds but the check might need to wait in line, depending on how many integrations are enabled on the same Agent. Also if the check method takes more than 30 seconds to finish, the Agent skips execution until the next interval.

Datadog

It seems that for custom sql metrics, you can only specify a single time frequency to run ALL the queries. So if one team creates a query they want to run every minute, and another team wants to run every hour – you can’t.

One team wanted to run a long-running query, but since the first team had set the queries to run every 60 seconds, then this long-running query wasn’t possible.

In a similar fashion, we also anticipate problems if the total time of all queries exceeds this 60 second limit which we will soon do with only several queries.

Another problem that we found is that the time you set is just a guide. So Datadog could actually run it twice in the 60 second period. Then when it comes to creating the dashboard, you have to be careful that you don’t end up counting the data multiple times. Some teams were seeing decimal numbers on their charts when counting data with only whole numbers!

The possibly crazy workaround

John

I think a good workaround would be to have the data refreshed hourly, and placed in a separate database somewhere then have that separate database queried every 60 seconds by Datadog. If it’s separate to the Live estate – it should reduce the risk. Needs thought putting into how you would pull those stats into a database hourly however. Need a SQL agent job or similar that could collect them once an hour and push them to a central separate location.

John

key thing, would be to ensure we aren’t using the scheduler to kick off SQL that monitors the scheduler 🤣

Christian

Need More Tools?

We purchased Datadog to replace several metric tools. Now we have seen the SQL metrics are a bit basic, it seems like we are concluding we need an alternative tool. I wonder if we will keep buying different metric tools over time and end up in the same situation we were in before!

You get some basic SQL monitoring in the base DataDog install (or the APM one, not sure which). You can pay extra for “enhanced” SQL monitoring in the DBM module. It’s still very basic and about 3x the cost of “proper” SQL monitoring tools. I’m sure as the DBM module matures it will get closer to fit for purpose, but right now it’s an incredibly expensive SQL Server monitoring tool that’s feature poor. If it was near zero cost, I’d have it everywhere to give a wider audience better visibility of what the DB layer does in live, but the features don’t currently justify the cost of that particular extra module.

Database Administrator

manager: can you draw me a pretty graph

pretty graph: